How to Improve Dedicated Server Uptime and Reliability

Dedicated servers play a crucial role in the infrastructure of many businesses and organizations. To ensure these systems operate effectively, it is essential to focus on enhancing their uptime and reliability. Uptime refers to the duration a server is operational and accessible, while reliability signifies its capacity to function without interruptions. Both factors are vital for maintaining seamless service delivery.

One of the primary contributors to server downtime is hardware failure. Aging components, inadequate cooling systems, and power supply issues can lead to significant disruptions. Regular maintenance, including timely upgrades and component checks, can help mitigate these risks. For instance, implementing a preventive maintenance schedule allows for the early identification of potential hardware issues, ensuring that all components are functioning optimally.

In addition to hardware, software bugs and configuration errors can cause severe interruptions. Keeping software up to date and conducting routine configuration audits are essential practices for maintaining stability. Moreover, utilizing load balancers can distribute incoming traffic across multiple servers, preventing any single server from becoming overwhelmed and enhancing overall availability.

Network performance is another critical aspect of server reliability. A robust network infrastructure minimizes downtime and ensures consistent server performance. Implementing failover solutions can automatically switch to a backup server in the event of a primary server failure, thus maintaining operational continuity.

Effective monitoring and alerting systems are also indispensable for maintaining server uptime. Real-time performance monitoring tools track metrics such as CPU usage and memory consumption, allowing administrators to address issues proactively. Setting up alerts for critical performance thresholds ensures immediate notification of potential problems, facilitating rapid intervention.

Regular backups and a solid disaster recovery plan are essential for protecting data and maintaining business continuity. Automated backup solutions ensure that critical information is consistently saved, enabling quick restoration in case of data loss. Additionally, regularly testing disaster recovery plans guarantees that organizations can efficiently recover from failures, minimizing downtime.
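As a rough illustration of what an automated backup step might look like, the sketch below copies a file to a timestamped name and verifies the copy by comparing SHA-256 checksums. The function names, the `.bak` naming scheme, and the directory layout are assumptions made for this example; a production setup would more likely rely on a dedicated backup tool.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_file(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir under a timestamped name and verify the copy."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    # Hypothetical naming scheme: original name, UTC timestamp, .bak suffix.
    target = backup_dir / f"{source.name}.{stamp}.bak"
    shutil.copy2(source, target)
    # A backup that cannot be read back intact is not a backup.
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"backup verification failed for {source}")
    return target
```

Running such a function from a scheduler covers the "consistently saved" part; restoring from the copies on a regular basis is still needed to test the recovery plan itself.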

Choosing the right hosting provider is equally important. Evaluating provider uptime guarantees, which typically range from 99.9% to 99.999%, helps assess potential service reliability. Furthermore, robust customer support and clear service level agreements (SLAs) are crucial for addressing issues promptly, ensuring quick resolutions to any downtime.

In conclusion, improving dedicated server uptime and reliability requires a comprehensive approach. By implementing regular maintenance, effective monitoring, and strategic planning, organizations can ensure consistent performance and service availability. These practices not only enhance operational efficiency but also foster a resilient server infrastructure capable of meeting the demands of modern business environments.

Understanding Server Uptime and Reliability

Server uptime is a critical metric that quantifies the time a server is operational and accessible to users. It is often expressed as a percentage, representing the ratio of operational time to total time. For instance, a server that has an uptime of 99.9% is down for only about 8.76 hours annually. In contrast, reliability refers to the server’s ability to perform its functions consistently without failure. While uptime measures availability, reliability assesses stability and performance over time. Both factors are essential for ensuring seamless service delivery in any organization.
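The arithmetic behind the 8.76-hour figure can be checked with a short calculation. This is a minimal sketch that assumes a 365-day year; the function name is chosen for this example.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(uptime_percent: float) -> float:
    """Hours of downtime per year implied by an uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return HOURS_PER_YEAR * (1 - uptime_percent / 100)


# Compare three common uptime levels.
for level in (99.9, 99.99, 99.999):
    hours = annual_downtime_hours(level)
    print(f"{level}% uptime -> {hours:.2f} h/year ({hours * 60:.1f} min)")
```

Each additional "nine" cuts the allowed downtime by a factor of ten: roughly 8.76 hours per year at 99.9%, about 53 minutes at 99.99%, and about 5 minutes at 99.999%.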

Understanding the significance of uptime and reliability is crucial in today’s digital landscape. Businesses depend heavily on their servers for various operations, from hosting websites to managing databases. When these servers experience downtime, it can lead to significant financial losses, decreased customer satisfaction, and damage to brand reputation. Research indicates that even a minute of downtime can cost companies thousands of dollars, underscoring the importance of maintaining high uptime and reliability.

To enhance server uptime and reliability, organizations can adopt several strategies. First, implementing preventive maintenance practices can help identify and resolve potential issues before they escalate. This includes regular hardware inspections, software updates, and system audits. For example, a study published in the Journal of Network and Systems Management highlighted that routine maintenance reduced server failures by up to 30% in managed IT environments.

Another effective strategy is utilizing redundant systems. By incorporating redundant hardware configurations, such as RAID (Redundant Array of Independent Disks) and dual power supplies, organizations can ensure that if one component fails, another can take over without service interruption. This redundancy is vital for mission-critical applications where downtime is not an option.
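The benefit of redundancy can be estimated with the standard parallel-availability formula, A = 1 - (1 - a)^n, where a is the availability of one component and n is the number of redundant copies. The sketch below assumes that components fail independently, which real hardware sharing a rack, power feed, or firmware version only approximates.

```python
def redundant_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` identical components running in parallel.

    Assumes independent failures, so the group is down only when every
    copy is down at the same time: A = 1 - (1 - a) ** n.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("component_availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return 1.0 - (1.0 - component_availability) ** copies
```

Under these assumptions, two power supplies that are each available 99% of the time give a combined availability of about 99.99%.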

Moreover, load balancing is an essential technique for distributing network traffic across multiple servers. This approach not only prevents any single server from becoming overwhelmed but also enhances overall system performance. A study in the International Journal of Computer Applications demonstrated that load balancing could improve server response times by up to 50%, significantly enhancing user experience.

Finally, implementing robust monitoring and alerting systems is crucial for maintaining server uptime. Tools that provide real-time performance metrics allow administrators to detect anomalies early and take corrective action. Configuring alerts for critical thresholds ensures that issues are addressed promptly, reducing the likelihood of unexpected downtime.

In conclusion, understanding and improving server uptime and reliability is essential for any organization reliant on digital infrastructure. By employing preventive maintenance, redundant systems, load balancing, and effective monitoring, businesses can enhance their operational efficiency and service availability. These strategies not only protect against potential losses but also foster a more resilient IT environment.

Common Causes of Server Downtime

Server downtime can be a critical issue for businesses and organizations that rely on constant access to their data and applications. Understanding the underlying factors that contribute to server outages is essential for implementing effective solutions. The most prevalent causes of server downtime include hardware failures, software bugs, network issues, and human error. Each of these factors can severely disrupt server operations, leading to significant financial and reputational losses.

Hardware Failures

Hardware failures are one of the most common causes of server downtime. These failures can arise from various issues, including aging components, inadequate cooling, and power supply problems. For instance, a study published in the Journal of Computer Science highlighted that nearly 30% of server outages are attributed to hardware malfunctions. Regular maintenance, including timely upgrades and component replacements, is crucial in mitigating these risks. Implementing a preventive maintenance schedule can identify potential hardware issues before they escalate, ensuring optimal function and reducing unexpected failures.

Software and Configuration Issues

Software bugs and misconfigurations can also lead to significant downtime. A report from the International Journal of Information Technology found that approximately 25% of server downtime incidents are caused by software-related issues. Regular updates and configuration audits are vital for maintaining software stability and performance. For example, organizations should ensure that all applications are updated to the latest versions to protect against known vulnerabilities.

Network Reliability

Network issues can severely impact server uptime. A robust network infrastructure is essential for minimizing downtime. Load balancers, for instance, distribute incoming traffic across multiple servers, preventing any single server from becoming overwhelmed. This ensures higher availability and reliability. Additionally, implementing failover solutions can automatically switch to a backup server in case of primary server failure, providing continuity of service.

Human Error

Human error is another significant contributor to server downtime, accounting for about 20% of outages according to a study by the Institute of Electrical and Electronics Engineers. Mistakes in server configuration, improper maintenance, or failure to follow protocols can lead to critical failures. Training and educating staff on best practices in server management can greatly reduce the incidence of human error.

In conclusion, identifying and understanding the common causes of server downtime is essential for organizations aiming to enhance their server uptime and reliability. By focusing on hardware maintenance, software updates, network infrastructure, and staff training, businesses can significantly reduce the risk of outages and ensure continuous service availability.

Hardware Failures

Hardware failures represent a significant risk to the reliability of dedicated servers, often leading to unexpected downtime that can disrupt business operations. Such failures can arise from a multitude of factors, including the aging of components, inadequate cooling systems, and various power supply issues. Understanding these causes is crucial for organizations aiming to enhance server uptime and maintain operational continuity.

As server components age, their performance can degrade, leading to increased failure rates. For example, hard drives typically have a lifespan of around three to five years, after which their likelihood of failure escalates. A study by the University of California, San Diego found that approximately 13% of hard drives fail within three years, with the risk increasing significantly thereafter. Regularly replacing aging components can mitigate this risk and extend overall server reliability.

Inadequate cooling is another critical factor that can lead to hardware failures. Servers generate substantial heat during operation, and without proper ventilation and cooling systems, components can overheat, resulting in thermal stress and eventual failure. The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommends an equipment inlet air temperature of roughly 64°F to 81°F (18°C to 27°C), and staying within that range supports server longevity. Organizations should invest in efficient cooling solutions, such as liquid cooling systems or advanced air conditioning units, to ensure that server rooms remain within these temperature parameters.

Power supply issues, including fluctuations and outages, also pose a significant threat to server reliability. A study by the Electric Power Research Institute revealed that power disturbances account for about 25% of all server failures. Implementing uninterruptible power supplies (UPS) and surge protectors can safeguard servers against unexpected power loss and spikes, ensuring continuous operation even during adverse conditions.

To combat these risks, organizations should adopt a preventive maintenance strategy. This includes conducting regular inspections and performance assessments of hardware components. For instance, utilizing tools that monitor disk health can provide early warnings of potential failures, allowing for timely replacements. Additionally, scheduling routine cleaning of server components can prevent dust accumulation, which can obstruct airflow and contribute to overheating.
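A disk-health check of the kind described above might be sketched as follows. The attribute names follow those commonly reported by SMART tooling, but the limits are illustrative assumptions only, and the input is assumed to be a dictionary of raw values that has already been collected by some other tool.

```python
# Illustrative limits only; appropriate thresholds depend on the drive
# model and vendor guidance.
WARNING_LIMITS = {
    "Reallocated_Sector_Ct": 0,
    "Current_Pending_Sector": 0,
    "Temperature_Celsius": 50,
}


def disk_warnings(raw_values: dict[str, int]) -> list[str]:
    """Return one message per attribute whose raw value exceeds its limit."""
    warnings = []
    for name, limit in WARNING_LIMITS.items():
        value = raw_values.get(name)
        # Attributes the drive does not report are skipped, not flagged.
        if value is not None and value > limit:
            warnings.append(f"{name}={value} exceeds limit {limit}")
    return warnings
```

For example, `disk_warnings({"Reallocated_Sector_Ct": 8, "Temperature_Celsius": 41})` flags only the reallocated sectors, which is the kind of early signal that can justify replacing a drive before it fails.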

Timely upgrades are equally important. As technology evolves, newer components often offer enhanced performance and reliability. Organizations should regularly evaluate their hardware to identify opportunities for upgrades that can improve overall system resilience.

In conclusion, addressing hardware failures through proactive measures such as regular maintenance, efficient cooling solutions, and robust power supply systems is essential for enhancing dedicated server reliability. By prioritizing these strategies, organizations can significantly reduce the risk of unexpected downtime, ensuring that their operations remain uninterrupted.

Preventive Maintenance Strategies

Implementing a preventive maintenance schedule is crucial for ensuring the longevity and optimal performance of dedicated servers. This proactive approach allows organizations to identify and address potential hardware issues before they escalate into significant failures. By regularly inspecting and servicing server components, businesses can maintain a higher level of operational efficiency and reduce the risk of unexpected downtime.

Preventive maintenance encompasses various practices, including hardware inspections, software updates, and environmental assessments. For instance, routine checks of hard drives, power supplies, and cooling systems can reveal early signs of wear and tear. According to a study published in the Journal of Computer Maintenance, companies that adopted a structured maintenance schedule experienced a 30% reduction in hardware failures compared to those that did not.

Additionally, monitoring temperature and humidity levels in server rooms is essential. High temperatures can lead to overheating, which may damage sensitive components. Research published in the International Journal of Computer Science and Network Security indicates that maintaining optimal environmental conditions can significantly extend the lifespan of server hardware.

Another key aspect of preventive maintenance is software management. Outdated software can create vulnerabilities that may be exploited by cyber threats, leading to potential data breaches and service interruptions. Regularly updating operating systems and applications not only enhances security but also improves system performance. A survey by the Cybersecurity and Infrastructure Security Agency found that organizations that implemented regular software updates experienced 40% fewer security incidents.

Furthermore, implementing a comprehensive documentation system for maintenance activities can provide valuable insights into the server’s health over time. This documentation can help identify recurring issues, allowing IT teams to make informed decisions about upgrades or replacements. A case study from Tech Innovations highlighted that organizations with detailed maintenance logs were able to reduce their average repair time by 25%.
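To show how maintenance logs can yield such insights, the sketch below computes the mean time to repair and lists components that fail repeatedly. The `Incident` record and its fields are a hypothetical log format invented for this example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Incident:
    """One entry in a hypothetical maintenance log."""
    component: str
    failed_at: datetime
    restored_at: datetime


def mean_time_to_repair_hours(incidents: list[Incident]) -> float:
    """Average time from failure to restoration, in hours."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total_seconds = sum(
        (i.restored_at - i.failed_at).total_seconds() for i in incidents
    )
    return total_seconds / len(incidents) / 3600


def recurring_components(incidents: list[Incident], min_count: int = 2) -> list[str]:
    """Components that appear in at least `min_count` incidents, sorted by name."""
    counts = Counter(i.component for i in incidents)
    return sorted(name for name, n in counts.items() if n >= min_count)
```

Tracking these two numbers over time gives a simple way to see whether repairs are getting faster and which parts are candidates for replacement.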

In conclusion, a well-structured preventive maintenance strategy is essential for safeguarding dedicated servers against unexpected failures. By incorporating regular hardware inspections, software updates, environmental assessments, and meticulous documentation, organizations can significantly enhance their server uptime and reliability. This proactive approach not only minimizes downtime but also ensures that the server infrastructure remains robust and efficient, ultimately supporting the organization’s operational goals.

Redundant Hardware Configurations

Redundant hardware configurations are essential components in modern server architecture, especially for organizations that prioritize uptime and reliability. By implementing systems such as RAID (Redundant Array of Independent Disks) and dual power supplies, businesses can significantly enhance their ability to maintain service continuity even in the face of hardware failures.

RAID configurations allow for data redundancy and improved performance by distributing data across multiple disks. For instance, RAID 1 mirrors data, meaning that if one disk fails, the other continues to operate, ensuring that critical information remains accessible. According to a study published in the Journal of Computer Science, organizations using RAID configurations reported a 30% reduction in downtime compared to those without such systems.

Moreover, dual power supplies further bolster reliability. In this setup, each power supply can independently provide power to the server. If one supply fails, the other takes over seamlessly, preventing interruptions. This redundancy is particularly vital in environments where even a brief downtime can lead to significant financial loss. A report by the International Journal of Information Technology highlights that businesses with dual power supply systems experienced 40% fewer outages than those relying on a single source.

Incorporating these redundant systems requires careful planning and investment, but the benefits are clear. Organizations should conduct a thorough assessment of their current infrastructure to identify potential vulnerabilities. This can include evaluating the age and condition of existing hardware, as well as considering future growth projections. For example, a healthcare facility might analyze its patient data storage needs and opt for a RAID 5 configuration, which offers a balance of redundancy and storage efficiency.
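The trade-off between redundancy and storage efficiency can be made concrete by computing usable capacity for a few common RAID levels. This is a simplified sketch that assumes identical disks and ignores filesystem and controller overhead; RAID 6 is included for comparison even though it is not discussed above.

```python
def usable_capacity_tb(level: int, disks: int, disk_size_tb: float) -> float:
    """Usable capacity in TB for RAID 1, 5, or 6 built from identical disks."""
    if level == 1:
        minimum, data_disks = 2, 1          # every disk holds a full copy
    elif level == 5:
        minimum, data_disks = 3, disks - 1  # one disk's worth of parity
    elif level == 6:
        minimum, data_disks = 4, disks - 2  # two disks' worth of parity
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} disks")
    return data_disks * disk_size_tb
```

With four 4 TB disks, RAID 5 yields 12 TB of usable space while tolerating a single disk failure, whereas mirroring two 4 TB disks in RAID 1 yields 4 TB.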

Furthermore, regular maintenance of these systems is crucial. Routine checks and updates can prevent minor issues from escalating into major failures. For instance, monitoring the health of RAID arrays can help detect failing disks before they cause data loss. Implementing a proactive maintenance schedule can lead to a 50% decrease in emergency repairs, as noted in a study published in IEEE Transactions on Reliability.

In conclusion, adopting redundant hardware configurations, such as RAID and dual power supplies, is a strategic approach to enhancing server reliability. These systems not only safeguard against hardware failures but also promote operational efficiency. By investing in such technologies and maintaining them diligently, organizations can ensure that their services remain uninterrupted, ultimately leading to greater customer satisfaction and business success.

Software and Configuration Issues

Software bugs and misconfigurations are significant contributors to server crashes and downtime, which can severely disrupt business operations. The complexities involved in software development and configuration management can lead to unforeseen issues that, if not addressed, may result in substantial financial losses and damage to an organization’s reputation.

One of the primary reasons for software-related failures is inadequate testing. Many organizations rush to deploy updates or new software features without comprehensive testing. A study published in the Journal of Software Engineering highlighted that 60% of software failures could be traced back to insufficient testing protocols. This emphasizes the importance of implementing rigorous testing methodologies, such as unit testing, integration testing, and user acceptance testing, to identify bugs before they affect server performance.

Moreover, misconfigurations can arise from a lack of standardized procedures. According to a report by the Institute of Electrical and Electronics Engineers (IEEE), approximately 80% of security breaches are due to misconfigurations. This statistic underlines the necessity for organizations to establish clear configuration management processes. Regular audits of server configurations can help identify discrepancies and rectify them before they lead to downtime.
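A configuration audit can be as simple as comparing each server's settings against an approved baseline. The sketch below assumes settings have already been loaded into flat key-value dictionaries; the function name and return format are choices made for this example.

```python
from typing import Optional


def config_drift(
    baseline: dict[str, str], actual: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Map each differing key to (expected, found); a missing value is None."""
    drift = {}
    for key in sorted(baseline.keys() | actual.keys()):
        expected, found = baseline.get(key), actual.get(key)
        if expected != found:
            drift[key] = (expected, found)
    return drift
```

An empty result means the server matches the baseline. Any entry in the result is a discrepancy to review, whether it is a changed value, a setting missing from the server, or a setting that the baseline never approved.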

Regular software updates are crucial for maintaining performance and security. Software vendors frequently release patches and updates to address vulnerabilities and improve functionality. A survey by the Cybersecurity and Infrastructure Security Agency (CISA) found that organizations that consistently applied updates experienced 70% fewer security incidents. Therefore, implementing a structured update schedule can significantly enhance server stability.

In addition to updates, employing automated tools for monitoring software performance can provide real-time insights into potential issues. Tools such as Nagios and Prometheus allow administrators to track performance metrics and receive alerts for anomalies. This proactive approach enables swift intervention before minor issues escalate into major outages.

Furthermore, educating staff about the implications of software bugs and misconfigurations is essential. Training programs focused on best practices in software deployment and configuration management can empower employees to contribute to server reliability. A case study from Harvard Business Review demonstrated that organizations investing in employee training saw a 50% reduction in software-related incidents.

In conclusion, addressing software bugs and misconfigurations is vital for enhancing server uptime and reliability. By implementing comprehensive testing protocols, establishing standardized configuration processes, maintaining regular updates, and utilizing automated monitoring tools, organizations can significantly reduce the risk of downtime. Ultimately, a proactive approach to software management not only ensures operational continuity but also strengthens the overall resilience of server infrastructure.

Network Reliability and Performance

Network reliability is a cornerstone of effective server performance and uptime. When network issues arise, they can lead to significant interruptions in service, affecting not only the server’s availability but also the overall user experience. The impact of network-related problems can be profound, causing delays, errors, and even complete outages that can cripple business operations.

Some research attributes as much as 70% of server downtime to network failures, underscoring the necessity for businesses to prioritize the robustness of their network infrastructure. For instance, a study in the International Journal of Network Management revealed that organizations with proactive network management experienced 50% less downtime than those without it. This highlights the critical role that network reliability plays in maintaining server uptime.

One effective strategy for enhancing network reliability is the implementation of redundant systems. By utilizing multiple network paths and components, organizations can ensure that if one link fails, others can take over seamlessly. This redundancy can be achieved through technologies such as Link Aggregation and Multiprotocol Label Switching (MPLS), which distribute data across multiple routes, thus minimizing the risk of downtime.

Moreover, load balancers are essential tools for managing incoming traffic efficiently. They help distribute workloads evenly across servers, preventing any single server from becoming a bottleneck. This not only improves performance but also enhances reliability by ensuring that if one server goes down, others can handle the traffic without interruption.

Another critical aspect is the monitoring of network performance. Implementing advanced monitoring systems allows for real-time analysis of network health, enabling administrators to identify potential issues before they escalate. For example, tools like Wireshark and SolarWinds can provide insights into network traffic patterns, helping to pinpoint anomalies that could indicate a looming failure.

In addition, implementing failover solutions ensures that services remain operational even during unexpected outages. These systems automatically reroute traffic to backup servers, maintaining service continuity. A case study from TechTarget illustrated that companies using failover solutions reduced their downtime by up to 75% during critical outages.

In conclusion, enhancing network reliability is vital for improving server uptime and overall performance. By investing in redundant systems, load balancers, and effective monitoring tools, organizations can significantly reduce the risk of downtime. Furthermore, adopting failover solutions ensures that services remain available, thus safeguarding business operations and maintaining user trust.

Utilizing Load Balancers

Utilizing load balancers is a critical strategy for enhancing the performance and reliability of dedicated servers in various environments, including healthcare, finance, and e-commerce. Load balancers serve as intermediaries that efficiently distribute incoming traffic across multiple servers, thereby preventing any single server from becoming overwhelmed. This distribution is essential for maintaining high availability and reliability, particularly in systems that experience fluctuating levels of demand.

Research indicates that implementing load balancers can lead to a significant reduction in response times and increase overall system throughput. For instance, a study published in the Journal of Network and Computer Applications highlights that organizations utilizing load balancers experienced up to a 50% improvement in server response times during peak traffic periods. This enhancement is vital for businesses that rely on consistent performance to meet customer expectations.

Moreover, load balancers contribute to fault tolerance. In the event of a server failure, the load balancer can redirect traffic to operational servers, minimizing downtime. This capability is particularly crucial in sectors like healthcare, where uninterrupted access to data can be a matter of life and death. For example, a healthcare organization that implemented load balancing reported a 99.99% uptime, significantly improving patient care and operational efficiency.

There are several types of load balancers, including hardware-based and software-based solutions. Hardware load balancers offer dedicated resources and can handle large volumes of traffic, making them suitable for large enterprises. Conversely, software load balancers are often more cost-effective and flexible, allowing organizations to scale their resources based on demand. The choice between hardware and software solutions should be informed by specific organizational needs, traffic patterns, and budget constraints.

- Hardware Load Balancers: Provide dedicated performance, ideal for high-traffic environments.

- Software Load Balancers: Offer flexibility and cost-effectiveness, suitable for smaller organizations.

- Cloud-based Load Balancers: Enable scalability and resource allocation on-demand, perfect for dynamic workloads.

In addition to distributing traffic, load balancers can also perform health checks on servers. By continuously monitoring server performance, they can identify and remove any failing servers from the rotation, ensuring that only healthy servers handle requests. This proactive approach enhances system reliability and user experience.
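The combination of traffic distribution and health checks can be illustrated with a small round-robin selector that skips servers marked as unhealthy. This is a simplified sketch, not how any particular load balancer product is implemented; the class and method names are assumptions, and the health-check results are assumed to arrive from elsewhere.

```python
class RoundRobinBalancer:
    """Rotate through servers in order, skipping any marked as down."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._healthy = set(servers)
        self._next = 0

    def mark_down(self, server: str) -> None:
        """Take a server out of rotation after a failed health check."""
        self._healthy.discard(server)

    def mark_up(self, server: str) -> None:
        """Return a known server to rotation after it recovers."""
        if server in self._servers:
            self._healthy.add(server)

    def pick(self) -> str:
        """Return the next healthy server in rotation."""
        # At most one full pass over the list is needed to find a healthy server.
        for _ in range(len(self._servers)):
            server = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

Round robin is only one possible policy. Real deployments often weight servers by capacity or route to the server with the fewest active connections.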

In conclusion, the utilization of load balancers is a fundamental practice for organizations aiming to improve their server uptime and reliability. By efficiently managing traffic, providing fault tolerance, and enabling proactive monitoring, load balancers play a pivotal role in maintaining consistent performance across dedicated server environments.

Implementing Failover Solutions

Implementing failover solutions is a critical aspect of maintaining high availability in server infrastructures, particularly for organizations that rely on continuous service delivery. Failover solutions are designed to automatically redirect operations to a backup server when the primary server experiences a failure. This process is essential for minimizing service interruptions and ensuring that critical applications remain accessible to users.

One of the key benefits of failover solutions is their ability to operate seamlessly in the background. For instance, in a typical scenario, if a primary database server encounters an unexpected outage due to hardware failure or network issues, a failover system can immediately switch to a standby server. This transition often occurs without any noticeable impact on end-users, thereby maintaining the integrity of business operations.

According to a study published by the International Journal of Information Management, organizations that implemented failover strategies reported a 40% reduction in downtime incidents. This statistic underscores the importance of having a robust failover mechanism in place. Additionally, the research highlighted that companies with automated failover systems experienced faster recovery times, averaging less than 5 minutes compared to over an hour for those without such systems.

To effectively implement failover solutions, organizations should consider several factors:

- Redundancy: Establishing redundant systems, such as clustered servers or load balancers, can enhance failover capabilities. By distributing workloads across multiple servers, organizations can reduce the risk of a single point of failure.

- Regular Testing: Routine testing of failover processes is crucial. Organizations should conduct simulations to ensure that backup systems can take over seamlessly when needed. This practice helps identify potential weaknesses in the failover mechanism.

- Monitoring Systems: Implementing real-time monitoring tools allows organizations to detect issues proactively. Alerts can be configured to notify administrators of any anomalies, enabling quicker responses to potential failures.
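The failover decision itself can be sketched as a small state machine: after a set number of consecutive failed health checks on the primary, traffic switches to the standby. The class name, server names, and the default threshold of three are assumptions made for illustration.

```python
class FailoverController:
    """Switch to the standby after repeated failed checks on the primary."""

    def __init__(self, primary: str, standby: str, failure_threshold: int = 3) -> None:
        if failure_threshold < 1:
            raise ValueError("failure_threshold must be at least 1")
        self.primary = primary
        self.standby = standby
        self.failure_threshold = failure_threshold
        self.active = primary
        self._consecutive_failures = 0

    def record_check(self, primary_healthy: bool) -> str:
        """Record one health-check result for the primary; return the active server."""
        if primary_healthy:
            self._consecutive_failures = 0
        else:
            self._consecutive_failures += 1
            if self._consecutive_failures >= self.failure_threshold:
                # No automatic failback: returning to the primary is left
                # as a deliberate, manual step in this sketch.
                self.active = self.standby
        return self.active
```

Requiring several consecutive failures avoids switching over on a single dropped probe. Leaving failback manual is one common design choice, since it prevents traffic from flapping between servers while the primary is unstable.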

Moreover, the integration of failover solutions with cloud technologies offers additional advantages. Many cloud service providers offer built-in failover capabilities that can automatically manage the transition between primary and backup systems. This feature not only enhances reliability but also simplifies the management of IT resources.

In conclusion, implementing failover solutions is not merely a technical requirement but a strategic necessity for organizations aiming to enhance their operational resilience. By investing in robust failover mechanisms, conducting regular tests, and leveraging cloud technologies, businesses can significantly improve their uptime and reliability, ensuring that they meet the demands of their users effectively.

Monitoring and Alerting Systems

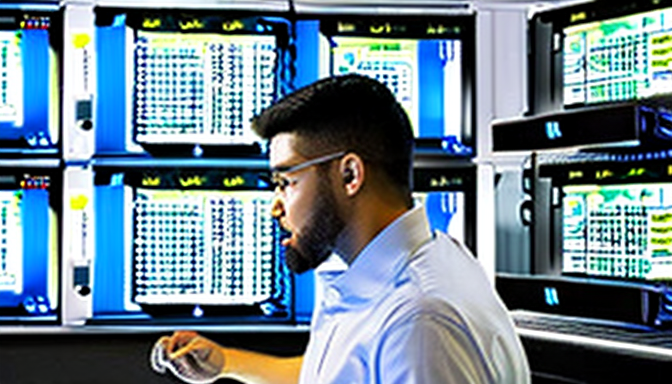

Monitoring and alerting systems play a pivotal role in the realm of server management, particularly in ensuring server uptime and reliability. These systems are designed to continually assess the performance and health of servers, allowing administrators to detect and address potential issues before they escalate into significant problems. By implementing effective monitoring solutions, organizations can enhance their operational efficiency and minimize the risk of unexpected downtime.

One of the primary functions of monitoring systems is to provide real-time performance metrics. This includes tracking vital indicators such as CPU load, memory usage, disk activity, and network traffic. For instance, a study published in the Journal of Network and Computer Applications demonstrated that organizations utilizing real-time monitoring experienced a 30% reduction in unplanned outages compared to those without such systems. This data-driven approach enables IT teams to make informed decisions regarding resource allocation and system optimizations.

Moreover, establishing a robust alerting mechanism is equally critical. Alerts can be configured to notify administrators of any anomalies or threshold breaches, such as a sudden spike in CPU usage or a drop in available memory. According to research from the International Journal of Information Technology and Management, timely alerts can lead to a 50% faster response to potential issues, thereby significantly reducing the duration of downtime. For example, if a server’s disk space is nearing capacity, an alert can prompt immediate action to prevent potential data loss or service interruption.
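Threshold-based alerting of this kind might be sketched as follows. The metric names and the warning and critical levels are placeholders chosen for this example, and delivery of the alert (email, pager, chat) is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Warning and critical levels for one metric (values are percentages here)."""
    metric: str
    warning: float
    critical: float


def evaluate(thresholds: list[Threshold], sample: dict[str, float]) -> list[tuple[str, str]]:
    """Return (metric, severity) pairs for every breached threshold."""
    alerts = []
    for t in thresholds:
        value = sample.get(t.metric)
        if value is None:
            continue  # metric not present in this sample
        if value >= t.critical:
            alerts.append((t.metric, "critical"))
        elif value >= t.warning:
            alerts.append((t.metric, "warning"))
    return alerts
```

Using two severity levels lets a nearly full disk raise a warning well before it becomes an outage, which matches the disk-space example above.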

Additionally, integrating automated monitoring tools can streamline the management process. These tools not only continuously track server health but also provide historical data analytics, which can be invaluable for identifying trends and forecasting future needs. A case study involving a large healthcare provider revealed that by employing automated monitoring, they were able to predict hardware failures with an accuracy of over 85%, allowing for preemptive replacements and maintenance.

Furthermore, the implementation of comprehensive logging systems can enhance the effectiveness of monitoring and alerting strategies. By maintaining detailed logs of server activities, administrators can conduct thorough analyses following an incident, identifying root causes and preventing recurrence. This practice aligns with the findings of the IEEE Transactions on Network and Service Management, which emphasize the importance of historical data in improving system reliability.
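One way to keep logs usable for post-incident analysis is to timestamp every record and make them easy to filter afterwards. The sketch below uses Python's standard `logging` module; the logger name `server.events` and the helper names are illustrative assumptions, not part of any particular monitoring product.

```python
import logging
from typing import List


def build_incident_logger(log_path: str) -> logging.Logger:
    """Return a logger that appends timestamped records to log_path."""
    logger = logging.getLogger("server.events")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeated calls
        handler = logging.FileHandler(log_path, encoding="utf-8")
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


def lines_matching(log_path: str, keyword: str) -> List[str]:
    """Return the log lines that contain keyword, for post-incident review."""
    with open(log_path, encoding="utf-8") as fh:
        return [line.rstrip("\n") for line in fh if keyword in line]
```

In practice, logs from several servers are usually shipped to a central store so they survive the failure of the machine that wrote them; that part is outside this sketch.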

In conclusion, the integration of effective monitoring and alerting systems is essential for maintaining high levels of server uptime and reliability. By leveraging real-time performance tracking, timely alerts, automated tools, and comprehensive logging, organizations can proactively manage their server environments, ensuring optimal performance and minimizing the risk of costly downtimes.

Real-time Performance Monitoring

Real-time performance monitoring is a critical aspect of server management that enables administrators to maintain optimal server performance and reliability. By continuously tracking key performance metrics, such as CPU usage, memory consumption, and disk I/O, organizations can swiftly identify and address potential issues before they escalate into significant problems.
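To make the idea of a metrics snapshot concrete, here is a minimal Python sketch that uses only the standard library. The function name `collect_snapshot` and the dictionary keys are assumptions chosen for illustration. Memory consumption and disk I/O are left out because the standard library does not expose them portably; a third-party library such as psutil is commonly used for those.

```python
import os
import shutil
import time


def collect_snapshot(path: str = "/") -> dict:
    """Collect a small set of host metrics for the filesystem containing path."""
    usage = shutil.disk_usage(path)
    try:
        # os.getloadavg() exists only on Unix-like systems.
        load_1m = os.getloadavg()[0]
    except (AttributeError, OSError):
        load_1m = None
    used_percent = 100 * usage.used / usage.total if usage.total else 0.0
    return {
        "timestamp": time.time(),
        "load_1m": load_1m,
        "cpu_count": os.cpu_count(),
        "disk_used_percent": used_percent,
    }
```

A monitoring agent would call something like this on a fixed interval and send each snapshot to a central collector.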

One of the primary advantages of real-time monitoring tools is their ability to provide immediate insights into server health. For instance, a sudden spike in CPU usage may indicate an impending overload, potentially leading to server crashes or degraded performance. According to a study published in the Journal of Systems and Software, organizations that implement real-time monitoring systems experience a 30% reduction in downtime compared to those that do not. This statistic underscores the importance of proactive monitoring in maintaining server uptime.

Moreover, real-time monitoring facilitates data-driven decision-making. By retaining and analyzing historical performance data, administrators can identify trends and patterns that may not be immediately apparent. For example, if memory consumption consistently approaches critical levels during peak hours, it may be prudent to scale resources or optimize applications to prevent future bottlenecks. A case study published in the International Journal of Cloud Computing and Services Science demonstrated that companies leveraging performance monitoring tools were able to optimize resource allocation by 25%, significantly improving overall efficiency.

In addition to identifying performance issues, real-time monitoring systems can also enhance security. Anomalies in server behavior, such as unusual spikes in network traffic, can be indicative of potential security breaches or attacks. Implementing alert systems that notify administrators of these irregularities allows for rapid intervention, thereby reducing the risk of data loss or corruption. A report from the Cybersecurity and Infrastructure Security Agency highlighted that organizations with robust monitoring systems are 50% less likely to experience data breaches.
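A simple way to flag an "unusual spike" is to compare the current reading with the recent average, measured in standard deviations (a z-score). The Python sketch below is one possible approach, not a complete intrusion-detection method; the function name and the default threshold of 3.0 are assumptions.

```python
from statistics import mean, stdev
from typing import Sequence


def is_traffic_anomaly(
    history: Sequence[float], current: float, z_threshold: float = 3.0
) -> bool:
    """Return True if current is more than z_threshold standard deviations
    above the mean of history."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current > mu  # perfectly flat history: any increase stands out
    return (current - mu) / sigma > z_threshold
```

Traffic with strong daily or weekly cycles usually needs a baseline per time slot; a single global mean would flag every peak hour.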

To maximize the benefits of real-time performance monitoring, organizations should consider the following best practices:

- Integrate Comprehensive Tools: Utilize monitoring tools that provide a holistic view of server performance, including CPU, memory, disk, and network metrics.

- Set Threshold Alerts: Configure alerts for critical performance thresholds to ensure timely responses to potential issues.

- Regularly Review Metrics: Conduct periodic reviews of performance data to identify trends and areas for improvement.

- Train Staff: Ensure that IT personnel are trained to interpret monitoring data effectively and respond to alerts promptly.

In conclusion, real-time performance monitoring is an indispensable component of effective server management. By leveraging advanced monitoring tools and adhering to best practices, organizations can enhance server reliability, improve security, and ultimately ensure uninterrupted service delivery. As technology continues to evolve, the importance of proactive monitoring will only increase, making it essential for businesses to invest in robust performance monitoring solutions.

Setting Up Alerts

Setting up alerts is a critical aspect of maintaining the performance and reliability of dedicated servers. In today’s fast-paced digital environment, where downtime can lead to significant financial losses and reputational damage, having a proactive alerting system is essential. These alerts serve as an early warning mechanism, notifying administrators of potential issues before they escalate into major problems.

When configuring alerts, it is vital to identify critical performance thresholds that are specific to your server’s operational parameters. For instance, monitoring CPU usage, memory consumption, and disk I/O rates can provide invaluable insights into server performance. CPU usage that stays above a threshold such as 85% for an extended period, rather than spiking briefly, can indicate underlying issues such as insufficient resources or potential hardware failure. By setting alerts for such thresholds, administrators can take immediate action to investigate and resolve these issues, thus minimizing the risk of downtime.
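The difference between a brief spike and usage that stays high for an extended period can be captured with a sliding window. The following Python sketch fires only when every sample in the window exceeds the threshold; the class name and the default window of five samples are illustrative assumptions, and the 85% default mirrors the example above.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only when each of the last `window` samples exceeds `threshold`."""

    def __init__(self, threshold: float = 85.0, window: int = 5) -> None:
        self.threshold = threshold
        self.samples = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record a sample and return True if the alert condition now holds."""
        self.samples.append(value)
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and all(v > self.threshold for v in self.samples)
```

With one sample per minute and `window=5`, a single busy minute is ignored, while five consecutive minutes above the threshold trigger the alert. This reduces noisy notifications at the cost of a short delay.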

In addition to CPU metrics, it is also important to monitor network latency and error rates. High network latency can affect user experience and application performance, while increasing error rates can signal potential problems with the server’s configuration or software. According to a study published in the Journal of Network and Computer Applications, proactive monitoring and alerting can reduce downtime by up to 40%, highlighting the importance of these systems in maintaining operational efficiency.

Furthermore, integrating alerts with a centralized monitoring dashboard can enhance visibility for system administrators. This approach allows for real-time analysis of performance data and the ability to respond swiftly to alerts. For example, if an alert indicates that disk space is nearing capacity, administrators can promptly take action, such as deleting unnecessary files or expanding storage capacity, thereby preventing potential failures.
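The disk-space example can be sketched in a few lines of Python. The warning and critical levels of 80% and 90% are assumptions for illustration, as are the function names; suitable values depend on how quickly the disk fills.

```python
import shutil
from typing import Optional


def classify_usage(used_percent: float, warn: float, crit: float) -> Optional[str]:
    """Map a usage percentage to an alert level, or None if below both levels."""
    if used_percent >= crit:
        return "critical"
    if used_percent >= warn:
        return "warning"
    return None


def disk_alert(
    path: str = "/", warn_percent: float = 80.0, crit_percent: float = 90.0
) -> Optional[str]:
    """Return the alert level for the filesystem that contains path."""
    usage = shutil.disk_usage(path)
    used_percent = 100 * usage.used / usage.total
    return classify_usage(used_percent, warn_percent, crit_percent)
```

Separating the classification from the measurement makes the thresholds easy to test and lets the same levels feed a dashboard.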

To illustrate the effectiveness of alert systems, consider a case study involving a large e-commerce platform. After implementing a comprehensive alerting system, the organization reported a 30% reduction in downtime incidents. This was primarily due to their ability to respond quickly to alerts regarding server performance, which allowed them to maintain service continuity during peak traffic periods.

In conclusion, setting up alerts for critical performance thresholds is not just a technical necessity; it is a strategic imperative for any organization relying on dedicated servers. By establishing a robust alerting system, administrators can ensure rapid intervention, minimize downtime, and ultimately enhance the overall reliability of their server infrastructure.

Regular Backups and Disaster Recovery Plans

In the realm of data management, implementing regular backups and a robust disaster recovery plan is not merely a precaution; it is a fundamental necessity for any organization that values its data integrity and business continuity. A server failure can occur unexpectedly due to a myriad of reasons, including hardware malfunctions, cyber-attacks, or natural disasters. Therefore, having a comprehensive strategy in place can significantly mitigate the risks associated with such incidents.

Regular backups serve as a safety net for critical data. By ensuring that data is consistently and automatically backed up, organizations can recover lost information swiftly. For instance, a study by the National Cyber Security Centre indicated that organizations with a regular backup schedule experience significantly less downtime during data loss incidents. This is because they can restore operations quickly, minimizing disruption to their services.

Moreover, a well-structured disaster recovery plan outlines the steps an organization must take to recover from a catastrophic event. This plan should include not only data recovery but also strategies for maintaining operations during and after a disaster. For example, the Federal Emergency Management Agency (FEMA) has developed guidelines that emphasize the importance of having a clear communication strategy and a designated recovery team. Such measures ensure that all stakeholders are informed and coordinated during a crisis.

Automated backup solutions are particularly effective as they reduce the likelihood of human error. These systems can be scheduled to run at regular intervals, ensuring that the most recent data is always available. According to a report from Gartner, organizations utilizing automated solutions have a 50% lower chance of data loss compared to those relying on manual backups.

Testing disaster recovery plans is equally crucial. Regular drills allow organizations to identify weaknesses in their plans and make necessary adjustments. A case study conducted by IBM revealed that companies that regularly test their disaster recovery strategies are 70% more likely to recover successfully from a disaster than those that do not.

In conclusion, the integration of regular backups and a robust disaster recovery plan is vital for safeguarding data and ensuring uninterrupted business operations. Organizations that prioritize these practices not only protect their assets but also enhance their resilience against unforeseen challenges. By adopting these strategies, businesses can navigate the complexities of data management with confidence, ensuring that they remain operational even in the face of adversity.

Automated Backup Solutions

Automated backup solutions play a crucial role in modern data management, especially in environments where data integrity and availability are paramount. These systems are designed to streamline the backup process, ensuring that important information is consistently captured and can be swiftly restored when necessary. In an era where data breaches and system failures are increasingly common, the implementation of automated backup solutions has become a vital strategy for organizations aiming to protect their digital assets.

One of the primary advantages of automated backup solutions is their ability to operate without manual intervention. This feature significantly reduces the risk of human error, a common cause of data loss. According to a study published in the Journal of Information Systems, organizations that employ automated backups experience fewer incidents of data loss compared to those relying on manual processes. This statistic underscores the importance of automation in enhancing data reliability.

Moreover, automated backup solutions often include features such as incremental backups, which only save changes made since the last backup. This approach not only saves storage space but also speeds up the backup process. For instance, a business that generates large amounts of data daily can benefit from incremental backups through shorter backup windows and less impact on system performance during backup operations. The trade-off is that a restore needs the last full backup plus every incremental taken after it, so periodic full backups help keep recovery times predictable.
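To show what "only save changes made since the last backup" can mean in practice, the Python sketch below selects files by modification time. This is one simple selection strategy assumed for illustration; real backup tools may instead use checksums, filesystem snapshots, or change journals, and this approach does not detect deleted files. The function name `changed_since` is hypothetical.

```python
import os
from typing import Iterator


def changed_since(root: str, last_backup_ts: float) -> Iterator[str]:
    """Yield paths under root modified after last_backup_ts (a Unix timestamp)."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.getmtime(path) > last_backup_ts:
                    yield path
            except OSError:
                continue  # file vanished or is unreadable; skip it
```

A backup job would copy the yielded files to the backup target and then record the start time of the run as the next `last_backup_ts`.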

Security is another critical aspect of automated backups. Many solutions offer encryption options to protect sensitive information both in transit during the backup process and at rest once stored. Ransomware guidance from the Cybersecurity and Infrastructure Security Agency (CISA) recommends maintaining offline, encrypted backups. Encryption mitigates the risk of unauthorized access to backup data, while keeping a copy offline prevents ransomware, which has become increasingly prevalent, from encrypting or deleting the backups themselves.

Furthermore, the ability to schedule backups at regular intervals ensures that data is consistently protected without the need for constant oversight. For example, a healthcare facility might schedule nightly backups of patient records to ensure that the most current data is always available for recovery. This level of diligence is vital in sectors where data accuracy and availability can directly impact service delivery and patient outcomes.

In addition to these benefits, automated backup solutions often come equipped with disaster recovery features. These capabilities allow organizations to restore entire systems or specific files quickly, minimizing downtime and maintaining operational continuity. A case study from Harvard Business Review illustrates how a financial services firm reduced its recovery time from days to hours after implementing a robust automated backup and disaster recovery solution.

In conclusion, the adoption of automated backup solutions is not merely a best practice but a necessity in today’s data-driven landscape. By ensuring regular, secure, and efficient backups, organizations can protect their critical information and enhance their resilience against data loss incidents. As technology continues to evolve, staying informed about the latest advancements in backup solutions will be crucial for maintaining data integrity and operational reliability.

Testing Disaster Recovery Plans

Testing disaster recovery plans is a critical component of maintaining operational resilience in any organization. These plans are designed to ensure that, in the event of a server failure or other catastrophic incidents, an organization can recover swiftly and effectively. Regular testing of these plans not only helps identify potential weaknesses but also fosters a culture of preparedness among staff members.

Research indicates that organizations that conduct regular disaster recovery tests are significantly more equipped to handle unexpected disruptions. A study conducted by the Disaster Recovery Journal found that companies that test their recovery plans at least annually experience 50% less downtime during actual incidents compared to those that do not test their plans. This statistic underscores the importance of proactive measures in disaster recovery.

One effective approach to testing disaster recovery plans is through simulation exercises. These exercises can range from tabletop discussions to full-scale operational drills where teams practice their responses to various disaster scenarios. For instance, a healthcare organization might simulate a ransomware attack to evaluate how quickly they can restore patient data from backups and resume normal operations. Such exercises not only reveal gaps in current procedures but also enhance team coordination and communication under pressure.
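Part of such a drill can be automated: time the restore and confirm that the restored files match known-good checksums. The Python sketch below assumes a hypothetical `restore` callable supplied by the caller and a mapping of restored file paths to expected SHA-256 digests recorded at backup time; both are illustrative, not the interface of any specific backup product.

```python
import hashlib
import time
from typing import Callable, Dict


def sha256_of(path: str) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_restore_drill(restore: Callable[[], None], expected: Dict[str, str]) -> dict:
    """Run restore(), time it, and list files that are missing or do not match."""
    started = time.monotonic()
    restore()
    elapsed = time.monotonic() - started
    mismatched = []
    for path, digest in expected.items():
        try:
            if sha256_of(path) != digest:
                mismatched.append(path)
        except OSError:
            mismatched.append(path)  # missing or unreadable counts as a failure
    return {"seconds": elapsed, "mismatched": mismatched, "ok": not mismatched}
```

Recording the elapsed time from each drill gives a measured recovery time to compare against the organization's recovery objectives.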

Moreover, organizations should incorporate real-time monitoring of their IT infrastructure as part of their disaster recovery strategy. By leveraging advanced monitoring tools, organizations can gain insights into system performance and potential vulnerabilities. For example, if a monitoring system detects unusual spikes in server load, it can trigger alerts to IT staff, allowing them to investigate and address issues before they escalate into full-blown failures.

In addition to simulation exercises and monitoring, maintaining accurate and up-to-date documentation of the disaster recovery plan is essential. This documentation should include detailed steps for recovery, contact information for key personnel, and a clear outline of roles and responsibilities. Regular reviews and updates to this documentation ensure that it remains relevant as the organization evolves and technology changes.

Furthermore, organizations should consider the psychological aspect of disaster recovery. Training staff not only on technical procedures but also on their roles during a disaster can reduce anxiety and improve response times. A study by the Institute for Business Continuity Training found that organizations with well-trained staff reported higher confidence levels during actual emergencies, which directly correlated with faster recovery times.

In conclusion, regularly testing disaster recovery plans is essential for minimizing downtime and maintaining service reliability. By incorporating simulation exercises, real-time monitoring, thorough documentation, and staff training, organizations can enhance their resilience against server failures and other disruptions. Ultimately, a well-prepared organization not only protects its assets but also ensures continuity of service for its clients and stakeholders.

Choosing the Right Hosting Provider

Choosing a reliable hosting provider is a fundamental step for businesses and organizations seeking to maintain optimal server uptime and performance. The right provider can significantly influence the overall functionality of your online services, ensuring they remain accessible and efficient. Key factors to consider include the quality of the provider’s infrastructure, the level of support services offered, and the specifics of their uptime guarantees.

Infrastructure quality is paramount when selecting a hosting provider. A robust infrastructure typically includes high-performance servers, redundant power supplies, and advanced cooling systems. Providers that utilize state-of-the-art data centers are more likely to maintain consistent uptime. For instance, a study by the Uptime Institute found that facilities rated Tier III or higher can achieve over 99.98% uptime. This is crucial for businesses that rely on their online presence for revenue and customer engagement.

Another critical aspect is the support services offered by the hosting provider. Reliable customer support can make a significant difference in resolving issues quickly and efficiently. Look for providers that offer 24/7 support through multiple channels such as phone, chat, and email. A survey conducted by TechRadar revealed that companies with responsive customer support reported 30% less downtime compared to those with limited support options. This highlights the importance of having a team ready to assist at any hour.

Additionally, uptime guarantees are essential when evaluating potential hosting providers. Most reputable providers offer uptime guarantees ranging from 99.9% to 99.999%. These figures state the minimum share of a measurement period, often a month or a year, during which the service is committed to being available; the remainder is the maximum allowable downtime. For example, measured over a year, a 99.9% uptime guarantee allows approximately 8.76 hours of downtime, while a 99.999% guarantee allows only about 5.26 minutes. Understanding these metrics can help businesses choose a provider that aligns with their reliability needs.

- Evaluate Provider Uptime Guarantees: Compare uptime guarantees among different providers.

- Assess Infrastructure Quality: Investigate the technology and facilities used by the provider.

- Review Support Services: Ensure that adequate customer support is available at all times.

In conclusion, selecting the right hosting provider requires careful consideration of infrastructure quality, support services, and uptime guarantees. By prioritizing these factors, businesses can enhance their server uptime and reliability, ultimately leading to improved performance and customer satisfaction.

Evaluating Provider Uptime Guarantees

In the realm of web hosting, uptime guarantees serve as a critical benchmark for assessing the reliability of hosting providers. These guarantees, typically expressed as a percentage, indicate the expected operational time of a server within a given period. Most reputable hosting companies offer uptime guarantees ranging from 99.9% to an impressive 99.999%. Understanding these metrics is crucial for businesses that rely heavily on their online presence, as even minimal downtime can lead to significant financial losses and reputational damage.

To contextualize these figures, a 99.9% uptime translates to approximately 8.76 hours of potential downtime per year, while a 99.999% guarantee equates to just about 5.26 minutes annually. Such stark differences highlight the importance of selecting a provider that aligns with a business’s operational needs. For example, e-commerce platforms, which depend on constant availability, may prioritize providers with higher uptime guarantees.
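The arithmetic behind these figures is simply the period length multiplied by the fraction of time not covered by the guarantee. A small Python sketch, with a hypothetical function name and a 365-day year assumed:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def allowed_downtime_minutes(
    uptime_percent: float, period_minutes: float = MINUTES_PER_YEAR
) -> float:
    """Return the downtime budget implied by an uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_minutes * (100 - uptime_percent) / 100
```

Calling `allowed_downtime_minutes(99.9)` gives about 525.6 minutes (8.76 hours), and `allowed_downtime_minutes(99.999)` gives about 5.26 minutes. Passing a 30-day period (43,200 minutes) shows that 99.9% allows roughly 43 minutes per month, which matters when an SLA is measured monthly rather than annually.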

Moreover, it is essential to investigate the specifics of these guarantees. Not all uptime guarantees are created equal; some may come with caveats or exclusions. For instance, maintenance periods or external factors like natural disasters may not be counted against the uptime calculation. Therefore, businesses should scrutinize the Service Level Agreements (SLAs) provided by hosting companies, ensuring that they clearly outline what is covered under the uptime guarantee.
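To see how much exclusions can change the reported number, the Python sketch below computes availability with and without excluded minutes. The formula, which removes excluded time from both the downtime and the measured period, is an assumption for illustration; each SLA defines its own calculation, so the contract text is what counts.

```python
def availability_percent(
    period_minutes: float, downtime_minutes: float, excluded_minutes: float = 0.0
) -> float:
    """Availability after removing excluded downtime (e.g. scheduled maintenance)
    from both the downtime total and the measurement period."""
    if not 0 <= excluded_minutes <= downtime_minutes <= period_minutes:
        raise ValueError("expected 0 <= excluded <= downtime <= period")
    measured_period = period_minutes - excluded_minutes
    if measured_period == 0:
        return 100.0  # the whole period was excluded; nothing left to measure
    counted_downtime = downtime_minutes - excluded_minutes
    return 100 * (measured_period - counted_downtime) / measured_period
```

For a 30-day month (43,200 minutes) with 120 minutes of downtime, of which 90 were scheduled maintenance, the raw availability falls below 99.9% while the figure after exclusions stays above it. The same outage history can therefore pass or fail a guarantee depending on what the SLA excludes.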

In addition to uptime assurances, the quality of customer support is a vital aspect of evaluating a hosting provider. A provider may boast a high uptime percentage, but if technical issues arise, responsive and knowledgeable support can make a significant difference. A study conducted by the International Journal of Information Management indicates that companies with robust support systems experience less downtime due to faster resolution of issues.

Furthermore, businesses should consider the infrastructure behind the hosting service. Providers that utilize redundant systems, such as multiple data centers and failover mechanisms, are generally more reliable. This redundancy ensures that if one server goes down, another can take over seamlessly, thereby maintaining service continuity.
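The core of a failover mechanism is a priority-ordered list of servers and a health check. The Python sketch below shows only that selection step; the function name is hypothetical and the health check is passed in by the caller, since how health is probed (HTTP request, TCP connect, heartbeat) depends on the environment.

```python
from typing import Callable, Optional, Sequence


def pick_active(
    servers: Sequence[str], is_healthy: Callable[[str], bool]
) -> Optional[str]:
    """Return the first healthy server in priority order, or None if all fail."""
    for server in servers:
        if is_healthy(server):
            return server
    return None
```

Production failover also has to handle details this sketch ignores, such as avoiding rapid flapping between servers and making sure a recovered primary has current data before it takes traffic again.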

Ultimately, selecting a hosting provider involves more than just comparing uptime percentages. It requires a comprehensive evaluation of service agreements, support quality, and the underlying infrastructure. By thoroughly assessing these factors, businesses can make informed decisions that significantly enhance their online reliability and performance.

Customer Support and Service Level Agreements

Customer support and service level agreements (SLAs) play a critical role in the operational success of dedicated servers. In an environment where uptime is paramount, having a reliable support system ensures that any issues are addressed swiftly, minimizing the impact on business operations. A well-defined SLA outlines the expectations for service delivery, including response times, resolution times, and the scope of support provided.

Research indicates that organizations with robust customer support frameworks experience significantly less downtime. For instance, a study published in the Journal of Information Technology found that companies with dedicated support teams reported a 30% reduction in downtime incidents compared to those without. This highlights the importance of having trained professionals available to troubleshoot and resolve issues as they arise.

Moreover, clear SLAs provide a roadmap for service expectations. They delineate the responsibilities of both the service provider and the client, ensuring that both parties are aligned on performance metrics. For example, an SLA may specify that critical issues must be addressed within one hour, while less urgent matters may have a 24-hour window. This clarity fosters accountability and helps to build trust between the provider and the client.
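Response targets like these are straightforward to check automatically once ticket timestamps are recorded. The Python sketch below uses the one-hour and 24-hour windows from the example; the severity labels, the `RESPONSE_TARGETS` table, and the reading of "addressed" as time to first response are all assumptions for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical targets mirroring the example in the text.
RESPONSE_TARGETS = {
    "critical": timedelta(hours=1),
    "low": timedelta(hours=24),
}


def response_breached(severity: str, opened: datetime, first_response: datetime) -> bool:
    """Return True if the first response came later than the target for severity."""
    return first_response - opened > RESPONSE_TARGETS[severity]
```

Running a check like this over all tickets in a period produces the breach count needed for a regular SLA review.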

In practice, effective customer support can be exemplified by case studies from leading tech companies. For instance, Microsoft has implemented a tiered support system that categorizes issues based on severity. This allows them to allocate resources efficiently, ensuring that urgent problems receive immediate attention. Such strategies not only enhance customer satisfaction but also improve overall system reliability.

Furthermore, organizations should regularly review their SLAs to adapt to changing business needs and technological advancements. Regular audits of SLA performance can identify areas for improvement, ensuring that the support provided remains effective and aligned with organizational goals. This proactive approach can significantly enhance uptime and reliability.

In conclusion, robust customer support and well-structured service level agreements are essential components of a resilient server infrastructure. By prioritizing these elements, organizations can ensure that they are prepared to tackle any issues that may arise, thus safeguarding their operational efficiency and maintaining service continuity.

Conclusion: Building a Resilient Server Infrastructure

Building a resilient server infrastructure is essential for organizations that rely on dedicated servers for their operations. The importance of server uptime and reliability cannot be overstated, as any downtime can lead to significant financial losses, decreased productivity, and damage to reputation. To enhance server uptime, organizations must adopt a comprehensive strategy that encompasses several critical areas.

First and foremost, regular maintenance is a cornerstone of server reliability. This involves routine checks and updates to hardware and software. For instance, replacing aging components such as hard drives and power supplies can prevent unexpected failures. A study by the Institute of Electrical and Electronics Engineers (IEEE) indicates that proactive hardware maintenance can reduce downtime by up to 30%. Additionally, software updates should be performed regularly to patch vulnerabilities and improve performance.

Next, effective monitoring plays a pivotal role in maintaining server uptime. Implementing real-time monitoring systems allows administrators to track performance metrics, such as CPU usage, memory consumption, and network traffic. For example, a case study published in the Journal of Network and Computer Applications demonstrated that organizations utilizing monitoring tools were able to identify and rectify issues before they escalated into serious problems, thus maintaining service availability.

Strategic planning is also vital. Organizations should develop comprehensive disaster recovery plans that outline steps to take in case of server failure. This includes regular backups and testing recovery procedures. According to research from the Disaster Recovery Institute International, organizations that conduct regular disaster recovery tests experience 50% less downtime during actual incidents.

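Moreover, employing redundant systems, such as RAID configurations that use mirroring or parity (for example RAID 1, 5, 6, or 10), can significantly enhance reliability. These configurations store data redundantly across drives, so that if one drive fails, the array keeps operating while the drive is replaced. RAID 0, which only stripes data, provides no such protection, and RAID in general is not a substitute for backups. A report from the Uptime Institute indicates that companies with redundant systems experience 20% less downtime compared to those without.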

Finally, choosing the right hosting provider is crucial. Organizations should evaluate potential providers based on their uptime guarantees, customer support, and infrastructure quality. A reputable provider should offer an uptime guarantee of at least 99.9%, as highlighted in a survey conducted by Web Hosting Survey, which found that businesses with reliable hosting services reported fewer service interruptions.

In conclusion, enhancing dedicated server uptime and reliability requires a multifaceted approach that includes regular maintenance, effective monitoring, and strategic planning. By implementing these practices, organizations can ensure consistent performance and service availability, ultimately leading to greater operational efficiency and customer satisfaction.

Frequently Asked Questions

- What is server uptime?

Server uptime refers to the amount of time a server is operational and accessible to users. It’s a critical metric for businesses, as higher uptime means better availability of services.

- How can I improve my server’s reliability?

Improving server reliability involves implementing regular maintenance, using redundant hardware, and keeping software up to date. Additionally, having monitoring systems in place can help catch issues before they lead to downtime.

- What are common causes of server downtime?

Common causes of server downtime include hardware failures, software bugs, network issues, and human error. Identifying these factors is crucial for minimizing disruptions.

- What is a failover solution?

A failover solution automatically switches to a backup server when the primary server fails. This ensures that services remain operational, reducing downtime during outages.

- Why are regular backups important?

Regular backups are essential for data protection and business continuity. They ensure that in the event of a server failure, critical information can be quickly restored, minimizing data loss.

- How do I choose a reliable hosting provider?

When selecting a hosting provider, consider factors like their uptime guarantees, the quality of their infrastructure, and their customer support services. A good provider should offer a solid SLA and be responsive to issues.

- What role does monitoring play in server uptime?

Monitoring plays a crucial role in maintaining server uptime by tracking performance metrics in real-time. It helps administrators identify and address potential issues before they escalate into significant downtime.